Calibration of Measurement Instruments: Precision in Practice

In advanced electrical engineering, measurement accuracy is foundational to system design, validation, and compliance. Whether you're characterizing high-speed digital interfaces, validating power integrity, or performing EMI diagnostics, the calibration of measurement instruments ensures that your data is not only accurate but also traceable, repeatable, and defensible.

At Tektronix, we view calibration not as a periodic obligation, but as a strategic enabler of engineering precision. This article explores the technical underpinnings of calibration, its impact on measurement uncertainty, and how Tektronix supports engineers working at the forefront of innovation.

What Is Calibration?

Calibration is the process of comparing the output of a measurement instrument (Device Under Test, or DUT) to a reference standard of known accuracy, typically traceable to the International System of Units (SI) through a National Metrology Institute (NMI) such as NIST or PTB. The objective is to quantify and, if necessary, correct deviations from nominal values.

Formal Definition (per BIPM VIM):

“An operation that, under specified conditions, in a first step, establishes a relation between the quantity values with measurement uncertainties provided by measurement standards and corresponding indications with associated measurement uncertainties and, in a second step, uses this information to establish a relation for obtaining a measurement result from an indication.”

What Is the Purpose of Calibration?

The purpose of calibration extends far beyond simply checking whether an instrument is “working.” It is a foundational process that enables measurement accuracy, traceability, and confidence in every data point collected. For high-performance instruments like the Tektronix RSA5000 Series Spectrum Analyzer, which is used in applications ranging from EMI diagnostics to wireless protocol analysis, calibration plays a critical role in maintaining system-level integrity.

Let’s explore five essential purposes of calibration in detail:

Verifying Accuracy

At its core, calibration is about confirming that an instrument’s measurements align with known, traceable standards, within a defined tolerance. For a spectrum analyzer, this means ensuring that frequency, amplitude, and phase measurements are within the manufacturer’s specified and warranted tolerances.

For example, if the RSA5000 is used to measure spurious emissions in a 5G transmitter, even a 1 dB amplitude error could lead to a false pass or fail. Calibration verifies that the analyzer’s readings are accurate across its full frequency range—up to 26.5 GHz—so engineers can trust the results.

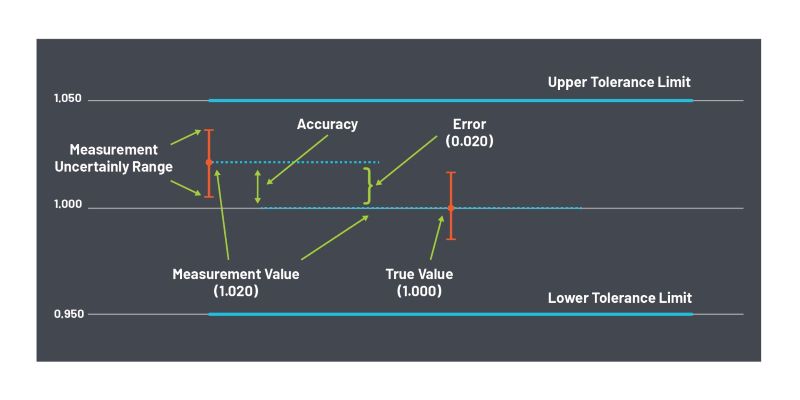

Quantifying Measurement Uncertainty

Every measurement has an associated uncertainty, which defines the range within which the true value is expected to lie. Calibration helps quantify this uncertainty by comparing the instrument’s output to a reference standard with a known uncertainty.

In the case of the RSA5000, a factory calibration performed on the equipment might reveal that the amplitude uncertainty at 2.4 GHz is ±0.5 dB. This information is critical when performing compliance testing, where regulatory limits are stringent and measurement margins are small. Without a quantified uncertainty, engineers cannot make informed decisions about pass/fail outcomes.
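One way to see why a quantified uncertainty matters for pass/fail outcomes is a simple check that treats each reading as an interval rather than a point. The sketch below is illustrative only: the function name `conformity_decision`, the -30 dBm limit, and the readings are hypothetical, and the ±0.5 dB figure is the example uncertainty quoted above.

```python
def conformity_decision(measured_dbm: float, limit_dbm: float, uncertainty_db: float) -> str:
    """Classify a reading against an upper limit, accounting for uncertainty.

    Returns "pass" only when the whole uncertainty interval is at or below the
    limit, "fail" only when the whole interval is above it, and
    "indeterminate" when the interval straddles the limit.
    """
    if uncertainty_db < 0:
        raise ValueError("uncertainty must be non-negative")
    if measured_dbm + uncertainty_db <= limit_dbm:
        return "pass"
    if measured_dbm - uncertainty_db > limit_dbm:
        return "fail"
    return "indeterminate"


# Hypothetical emission limit of -30 dBm, example uncertainty of 0.5 dB
print(conformity_decision(-31.0, -30.0, 0.5))  # pass
print(conformity_decision(-30.2, -30.0, 0.5))  # indeterminate
print(conformity_decision(-29.0, -30.0, 0.5))  # fail
```

The middle case is the one that an unquantified uncertainty hides: the reading is below the limit, yet the true value may not be.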

Ensuring Traceability

Traceability refers to the ability to link a measurement result back to the International System of Units (SI) through an unbroken chain of comparisons to national measurement standards or reference to fundamental physical constants. This is essential for maintaining consistency across labs, industries, and countries.

When Tektronix calibrates an RSA5000, it uses reference standards that are themselves calibrated against National Metrology Institute (NMI)-traceable sources. This ensures that the analyzer’s measurements are globally recognized and defensible in audits, certifications, and legal contexts.

Supporting Compliance and Audit Readiness

Many industries require documented proof that test equipment is calibrated and traceable. This is especially true in aerospace, defense, telecommunications, and medical device sectors.

Imagine a wireless device manufacturer using the RSA5000 to validate Bluetooth and Wi-Fi coexistence. During an ISO 9001 or FCC audit, the company must provide calibration certificates for all test equipment. A missing or expired certificate could result in non-compliance findings, production delays, or even product recalls. Regular calibration ensures that the organization is always audit-ready.

Enabling Corrective Action

Calibration doesn’t just confirm performance; it also identifies when an instrument deviates from acceptable tolerance levels. Reporting an out-of-tolerance measurement in a calibration report allows engineers to initiate corrective action before inaccurate measurements lead to costly errors.

For instance, if the RSA5000’s internal reference oscillator has drifted, it may result in incorrect center frequencies during spectrum sweeps. Calibration can detect the drift, and the resulting report can trigger a firmware-based adjustment or hardware repair. By catching the issue early, an engineer avoids collecting invalid data and maintains the integrity of their test results.
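To see why reference drift matters more at higher frequencies, the sketch below scales a fractional reference error to an absolute error at the tuned frequency. It assumes that every displayed frequency is derived from a single reference; the function name and the 0.5 ppm drift are hypothetical and are not RSA5000 specifications.

```python
def center_frequency_error_hz(center_hz: float, reference_error_ppm: float) -> float:
    """Absolute frequency error caused by a fractional reference error.

    Assumes every displayed frequency is derived from the same reference, so
    the fractional error carries over unchanged to the tuned frequency.
    """
    return center_hz * reference_error_ppm * 1e-6


# Illustrative drift of 0.5 ppm (an assumed value, not a published specification)
for center in (100e6, 2.4e9, 26.5e9):
    err = center_frequency_error_hz(center, 0.5)
    print(f"{center / 1e9:6.2f} GHz -> {err:9.1f} Hz error")
```

Under these assumptions, a 0.5 ppm drift corresponds to about 1.2 kHz at 2.4 GHz, which can matter for narrowband measurements.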

Why Is Calibration Important?

Calibration is essential for ensuring that measurement instruments perform within their specified accuracy and reliability. For engineers working in high-precision environments, the implications of uncalibrated equipment can be significant—impacting product quality, regulatory compliance, and operational efficiency.

Here’s a deeper look at the three primary reasons calibration is critical:

Measurement Integrity

First and foremost, calibration safeguards measurement integrity. Over time, even high-quality instruments experience drift due to component aging, environmental exposure, or mechanical stress. This drift can compromise the accuracy of critical measurements. For instance, an RF engineer validating a 5G signal chain may rely on precise power level readings from a spectrum analyzer. If the analyzer has drifted by just 1 dB, it could falsely indicate that the device under test is non-compliant with emission standards, potentially leading to unnecessary redesigns or certification delays.

Regulatory and Quality Compliance

Calibration also plays a pivotal role in regulatory and quality compliance. Many industries are governed by strict standards—such as ISO/IEC 17025, AS9100, ISO 13485, and FDA 21 CFR Part 820—that require traceable calibration at defined intervals. Non-compliance can result in audit failures, fines, or product recalls. Consider a medical device manufacturer producing implantable cardiac monitors. These devices must be validated using calibrated oscilloscopes and DMMs. During an FDA audit, the company must present calibration certificates for all test equipment used in product verification. Missing or expired certificates could halt production or trigger a regulatory warning.

Instrument Longevity and Lifecycle Management

Finally, calibration supports instrument longevity and lifecycle management. It serves as a diagnostic tool that can reveal early signs of degradation, such as connector wear or thermal instability. This enables predictive maintenance and reduces the risk of unexpected failures. For example, a power electronics lab using high-voltage differential probes to measure switching transients in SiC-based inverters might discover during routine calibration that one probe exhibits increased noise and offset drift. Early detection allows the team to replace the probe before it causes erroneous data in a critical validation test, avoiding costly rework and preserving test integrity.

How Is Calibration Performed?

Calibration is a structured, multi-step process designed to ensure that a measurement instrument performs within its specified accuracy. For high-performance instruments like the Tektronix MSO54B, which offers up to 2 GHz bandwidth and 6.25 GS/s sampling rate, precision calibration is essential to maintain signal fidelity and compliance with industry standards.

Here’s how calibration is typically performed, step by step:

Stabilizing the Environment

Before calibration begins, the oscilloscope is placed in a controlled environment to minimize external influences. This includes stabilizing temperature (typically 23 ± 2°C), humidity, and electromagnetic interference. The MSO54B is allowed to warm up for at least 30 minutes to ensure thermal equilibrium, which is critical for accurate analog front-end behavior.

For example, if the oscilloscope was recently transported or stored in a cold environment, condensation or thermal gradients could affect internal timing circuits or voltage references. Stabilization ensures that all internal components are operating under nominal conditions.

Comparing DUT Readings to a Reference Standard

The oscilloscope’s vertical and horizontal measurement systems are then tested against traceable reference standards. These standards, such as precision voltage sources, time base generators, and signal generators, are themselves calibrated, thereby linking each measurement result to a traceable reference standard.

For the MSO54B, this might involve:

- Applying a known 1 Vpp sine wave at 1 MHz to verify vertical gain accuracy

- Using a 10 MHz time base reference to validate horizontal timing accuracy

- Checking trigger level thresholds and offset accuracy across all channels

Each measurement is compared to the expected value, and the deviation is recorded.

Calculating Measurement Error and Uncertainty

Once the raw data is collected, the calibration technician calculates the measurement error for each parameter. This includes both systematic errors (e.g., offset, gain drift) and random errors (e.g., noise, jitter).

For example, if the MSO54B reports a 1.0008 Vpp signal when the reference is exactly 1.0000 Vpp, the vertical gain error is +0.08%. This is then compared to the oscilloscope’s published specification (e.g., ±1.5% for vertical gain).
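The arithmetic in this example can be written out as a short sketch. The helper names `percent_error` and `within_spec` are hypothetical, and the ±1.5% limit is the example specification quoted above rather than a value taken from a datasheet.

```python
def percent_error(indicated: float, reference: float) -> float:
    """Relative error of the DUT indication, in percent of the reference value."""
    if reference == 0:
        raise ValueError("reference value must be non-zero")
    return (indicated - reference) / reference * 100.0


def within_spec(error_pct: float, spec_pct: float) -> bool:
    """True when the absolute error does not exceed the symmetric spec limit."""
    return abs(error_pct) <= spec_pct


error = percent_error(indicated=1.0008, reference=1.0000)
print(f"gain error: {error:+.2f} %")        # +0.08 %
print("in spec:", within_spec(error, 1.5))  # True
```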

Measurement uncertainty is then calculated using methods that comply with the Guide to the Expression of Uncertainty in Measurement (GUM).
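A GUM-style evaluation typically combines the standard uncertainties of independent contributions by root-sum-of-squares and then multiplies the result by a coverage factor. The sketch below shows that pattern under simplifying assumptions: all contributions are uncorrelated, all sensitivity coefficients are 1, and the budget entries are hypothetical values chosen for illustration, not figures from an actual calibration.

```python
import math


def standard_uncertainty_rectangular(half_width: float) -> float:
    """Convert a +/- limit with a rectangular distribution to a standard uncertainty."""
    return half_width / math.sqrt(3)


def combined_standard_uncertainty(components: list[float]) -> float:
    """Root-sum-of-squares of uncorrelated standard uncertainties
    (all sensitivity coefficients assumed to be 1)."""
    return math.sqrt(sum(u * u for u in components))


def expanded_uncertainty(u_c: float, k: float = 2.0) -> float:
    """Expanded uncertainty U = k * u_c; k = 2 gives roughly 95 % coverage
    when the combined distribution is approximately normal."""
    return k * u_c


# Hypothetical budget for a 1 Vpp gain check, all values in percent of reading
budget = [
    standard_uncertainty_rectangular(0.05),  # reference source limit, +/-0.05 %
    0.02,                                    # repeatability (Type A evaluation)
    standard_uncertainty_rectangular(0.01),  # DUT readout resolution
]
u_c = combined_standard_uncertainty(budget)
print(f"u_c = {u_c:.4f} %, U (k=2) = {expanded_uncertainty(u_c):.4f} %")
```

A real budget would also document the distribution assumed for each entry and any correlation between contributions.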

Documenting Results and Determining Pass/Fail Status

All calibration results are documented in a calibration certificate, which includes:

- Measured values

- Reference values

- Calculated errors

- Measurement uncertainties

- Pass/fail status for each test point

For the MSO54B, this certificate might show that all channels passed within specification, but one channel is approaching the upper limit of acceptable offset error. This information is critical for engineers who rely on the instrument for high-precision measurements.
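A certificate entry like this can be modeled as a small record that reports how much of the tolerance each test point consumes. The sketch below is a simplified illustration: the `CalPoint` class, the 80% warning threshold, and the offset values are all hypothetical, and the pass/fail rule compares error to tolerance directly without applying measurement uncertainty.

```python
from dataclasses import dataclass


@dataclass
class CalPoint:
    """One test point on a calibration certificate (hypothetical structure)."""
    name: str
    reference: float
    measured: float
    tolerance: float  # allowed +/- deviation, same unit as the readings

    @property
    def error(self) -> float:
        return self.measured - self.reference

    @property
    def tolerance_used(self) -> float:
        """Fraction of the tolerance consumed by the error (1.0 = at the limit)."""
        return abs(self.error) / self.tolerance

    def status(self, warn_fraction: float = 0.8) -> str:
        """PASS, PASS (marginal) at or above warn_fraction, or FAIL beyond the limit."""
        if self.tolerance_used > 1.0:
            return "FAIL"
        if self.tolerance_used >= warn_fraction:
            return "PASS (marginal)"
        return "PASS"


# Hypothetical offset readings in mV with a +/-2 mV tolerance
points = [
    CalPoint("CH1 offset", 0.0, 0.4, 2.0),
    CalPoint("CH2 offset", 0.0, 1.7, 2.0),
    CalPoint("CH3 offset", 0.0, -0.9, 2.0),
]
for p in points:
    print(f"{p.name}: error {p.error:+.1f} mV, "
          f"{p.tolerance_used:.0%} of tolerance, {p.status()}")
```

Here every channel passes, but CH2 is flagged as marginal, which is the kind of early warning described above.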

Tektronix provides digitally signed, metrologically traceable certificates that meet ISO/IEC 17025 requirements.

Adjusting the DUT if Necessary

If any parameter exceeds its allowable tolerance, the oscilloscope undergoes adjustment. For Tektronix instruments, this is performed using OEM-proprietary calibration software that is not available to third-party labs.

For instance, if the MSO54B’s time base is found to be drifting, the internal oscillator may be adjusted against a high-stability 10 MHz reference. After adjustment, the instrument is re-tested to confirm that all parameters are within specification.

When Should Calibration Be Performed?

Determining the appropriate calibration interval for measurement instruments is a nuanced process—there is no universal standard that applies to all equipment or use cases. Instead, calibration frequency should be based on a combination of regulatory requirements, historical performance data, environmental conditions, and risk tolerance.

Key Considerations for Setting Calibration Intervals

Ask the following questions to determine the right interval for your test and measurement equipment:

Does your application or quality system mandate a specific interval?

Some industries (e.g., aerospace, medical, nuclear) may require calibration at fixed intervals to comply with ISO, FDA, or federal regulations.

What is the historical reliability of the instrument?

If an instrument has consistently remained in tolerance over multiple calibration cycles, you may consider extending the interval—provided you have sufficient data to support the decision.

How frequently is the instrument used?

Instruments used continuously or in mission-critical applications may require more frequent calibration than those used occasionally.

What is the required measurement accuracy?

Tighter tolerances and lower uncertainty requirements demand more frequent calibration to maintain confidence in results.

What are the environmental conditions?

Exposure to temperature extremes, humidity, dust, or vibration can accelerate drift and degrade performance.

What is the cost of calibration vs. the cost of failure?

Consider the potential impact of a measurement error—such as product recalls, compliance violations, or safety risks—when evaluating calibration frequency.

Initial Calibration

If calibration data is not provided, or not available at the time of purchase, it is best practice to secure an initial or baseline calibration before use. This establishes a reference point for future calibrations and can support warranty claims or quality audits. Retaining this baseline data also helps in determining long-term instrument stability.

Regulatory or Specified Requirements

Certain instruments, especially those used in regulated environments, have prescribed calibration intervals. For example, a Keithley 2400 SourceMeter, commonly used in semiconductor testing and precision electrical measurements, may be subject to calibration every 12 months, or more frequently if used in a regulated environment. These environments often require strict adherence to documented calibration schedules to ensure traceability and measurement integrity. Always verify whether your application is governed by such standards or quality system requirements.

Statistically Derived Intervals

In the absence of external mandates, many organizations begin with the OEM-recommended calibration interval. Over time, this can be refined using statistical analysis of historical calibration data to determine the optimal interval based on actual instrument performance and risk.

This approach allows for:

- Interval extension for stable instruments

- Interval tightening for instruments with historical out-of-tolerance conditions

- Quantified reliability at the end of each calibration cycle
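As a rough illustration of the idea, the sketch below estimates end-of-period reliability from as-found results and nudges the interval up or down. It is a deliberately simple heuristic, not a published interval-analysis method: the function names, the 95% reliability target, the minimum of four records, and the 25% step size are all assumptions made for the example.

```python
def observed_reliability(history: list[bool]) -> float:
    """Fraction of past calibrations where the instrument was found in tolerance."""
    if not history:
        raise ValueError("at least one calibration result is required")
    return sum(history) / len(history)


def suggest_interval_months(
    current_months: int,
    history: list[bool],
    target: float = 0.95,
    min_records: int = 4,
) -> int:
    """Heuristic interval adjustment (illustrative thresholds, not a standard).

    - too little history: keep the current interval
    - reliability below target: shorten by 25 %
    - every record in tolerance: lengthen by 25 %
    - otherwise: keep the current interval
    """
    if len(history) < min_records:
        return current_months
    if observed_reliability(history) < target:
        return max(1, round(current_months * 0.75))
    if all(history):
        return round(current_months * 1.25)
    return current_months


# True = found in tolerance at that calibration (hypothetical histories)
print(suggest_interval_months(12, [True] * 6))                       # 15
print(suggest_interval_months(12, [True, True, False, True, True]))  # 9
print(suggest_interval_months(12, [True, True]))                     # 12
```

In practice, a formal method would also account for sample size and confidence level before extending an interval.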

Manufacturer-Recommended Intervals

Most manufacturers provide a recommended calibration interval in the product’s operation manual. These are typically based on “typical use” scenarios and serve as a good starting point, especially for new or unfamiliar equipment.

Unscheduled or Event-Driven Calibration

Calibration should also be performed outside of the regular schedule if:

- The instrument is dropped, mishandled, or exposed to overload

- It is relocated to a new facility or environmental condition

- Measurement results appear inconsistent or suspect

- The instrument undergoes repair or component replacement

Final Thoughts

For engineers working in high-accuracy domains, the calibration of measurement instruments is not a formality—it’s a critical control point in the measurement system. Tektronix calibration services are designed to support your need for traceability, uncertainty control, and system-level confidence.

Explore Tektronix Calibration Services

Visit our calibration solutions page to learn more about our calibration programs or request a calibration service.