Introduction: The Challenges of cdmaOne BTS Testing

A communications network is only as good as the quality of its user access: customer satisfaction and operating efficiency begin and end with the link between the system and its subscribers. In wireless telecommunication systems, the Base Transceiver Station (BTS) provides that access through its RF air interfaces to mobile devices. Since these interfaces are also the elements of the system most susceptible to faults and outside interference, they present a formidable challenge to those who install and maintain them.

Leading-edge troubleshooting tools and techniques are essential to maintaining systems at peak performance. These modern testers provide clear insight into complex transmitter systems by capturing and displaying information in formats that are easy to interpret. Testers must also be compact, dependable and rugged enough to withstand the rigors of field use. Most of all, portable troubleshooting tools must get the job done quickly and efficiently, zeroing in on problems and minimizing service disruptions and time spent off-line.

The stakes are high. Network systems must perform reliably in the face of fierce competition – success or failure will be the direct result of customer satisfaction. Sophisticated new technology must coexist in a complicated landscape of previous generations and new mobile systems – most of which must be supported for many years to come. Exponential growth in other wireless and RF devices is introducing new sources of noise and interference that threaten to degrade performance.

This application note addresses the most common measurement challenges faced by RF technicians and engineers who maintain cdmaOne base station equipment. It explains the fundamental concepts of key transmitter signals, examines typical faults and their consequences and offers guidelines for tests to verify performance and troubleshoot problems in the field. Reference information is also provided on the differences between cdmaOne and previous analog and 2G digital standards and the evolution to new 3G systems.

We'll begin with a brief overview of analog and digital multiplexing methods and a review of key CDMA signal parameters. We will then examine the various types of test tools used to characterize those parameters. A Test Notebook later in this document provides summaries and guidelines for making key transmitter measurements at an operating cdmaOne base station. For a brief history of wireless telephony, see the Appendix.

CDMA Basics

What are CDMA and cdmaOne?

Code Division Multiple Access (CDMA), as defined in Interim Standard 95 (IS-95), is a digital air interface standard for mobile equipment that increased the capacity of older analog methods while greatly improving transmission quality. "cdmaOne" is the brand name¹ for the complete wireless telephone system that incorporates the IS-95 interface. CDMA systems were serving over 65 million subscribers worldwide by June 2000.²

CDMA has proven itself as a successful wireless access technology in second-generation networks, and the evolving third-generation systems will also rely on CDMA techniques for radio access. However, the structure of the physical layer of a cdmaOne network is significantly different from that of its GSM or IS-136 counterparts.

Characteristics of cdmaOne Signals

The digital technologies in the cdmaOne BTS introduce a significant number of new measurement parameters for the operation and maintenance of a network. In analog systems, we measure parameters such as signal-to-noise ratio and harmonic levels to quantify how well a base station is performing on a network. In cdmaOne systems, these familiar analog parameters are often mixed with or replaced by new quality metrics such as Pilot Time Tolerance (Tau) and Waveform Quality.

Standards: Within the United States, the Telecommunications Industry Association (TIA) has written Interim Standard IS-95 for CDMA mobile/base station compatibility and IS-97 for CDMA BTS transmitter and receiver minimum performance standards. Standards maintained by the Association of Radio Industries and Businesses (ARIB) and the Telecommunication Technology Committee (TTC) in Japan, and by the Telecommunications Technology Association (TTA) in South Korea, describe similar tests for BTS performance.

Walsh Codes: "Walsh code" is the term used for the digital modulation code that separates the individual conversations and control signals on the RF carrier transmitted from a cdmaOne base station. Each forward traffic channel (user conversation) is uniquely identified by its Walsh code. There are 64 possible Walsh codes, and each code is 64 chips long. In cdmaOne, the only way to address individual user channels in a transmission is to demodulate the RF signal and detect their individual Walsh codes.
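To make the 64-code structure concrete, the sketch below builds the Walsh codes with the Sylvester (Hadamard) recursion and checks that every pair of distinct codes is orthogonal, which is the property that lets a receiver separate the channels. It is a minimal illustration assuming NumPy and a mapping of binary 0 to +1 and binary 1 to -1; the function name `walsh_matrix` is our own, not part of any standard or test-set API.

```python
import numpy as np

def walsh_matrix(n: int) -> np.ndarray:
    """Return an n x n matrix of +1/-1 Walsh codes (n must be a power of two).

    Built with the Sylvester recursion H(2n) = [[H, H], [H, -H]];
    row k is Walsh code k.
    """
    if n < 1 or n & (n - 1):
        raise ValueError("n must be a power of two")
    h = np.array([[1]])
    while h.shape[0] < n:
        h = np.block([[h, h], [h, -h]])
    return h

W = walsh_matrix(64)      # 64 codes, each 64 chips long
gram = W @ W.T            # inner product of every pair of codes

# Orthogonality: a code correlates to 64 with itself and to 0 with any other code.
print("orthogonal:", np.array_equal(gram, 64 * np.eye(64, dtype=int)))
```

Row 0 is all +1 (the code used by the pilot), and row 32 is 32 chips of +1 followed by 32 chips of -1.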

¹ cdmaOne is a registered trademark of the CDMA Development Group (CDG).

² CDMA Development Group (CDG), Subscriber Growth History, http://www.cdg.org/world/cdma_world_subscriber.asp

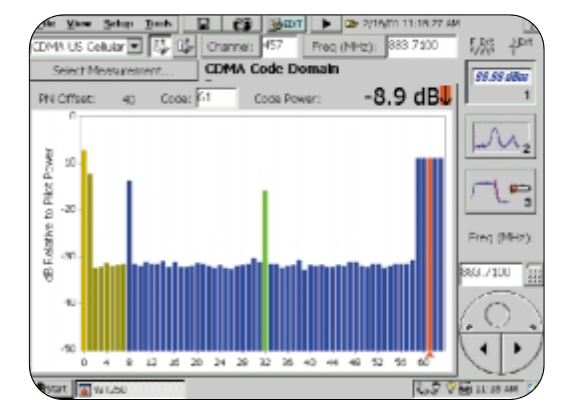

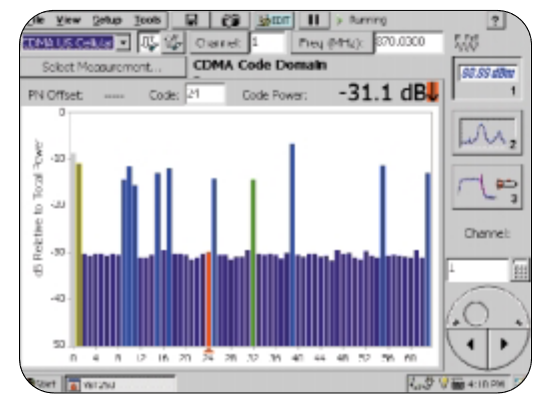

Many of the measurements we will discuss in this application note depend upon looking at a CDMA signal in the "Code Domain," that is, at the data in individual code channels recovered from a demodulated signal. Code domain power, for instance, is a graph of the power level in each of the 64 Walsh codes. In Figure 1, power is plotted on the vertical axis and the 64 Walsh codes (channels 0 to 63) are displayed on the horizontal axis. In this example, the power levels in codes 0, 1, 8, 32, 59, 60, 61, 62 and 63 indicate that they are carrying traffic or system data of some sort.
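A code domain power display can be mimicked in software by despreading: correlate each 64-chip symbol of the composite signal with every Walsh code and average the squared result. The sketch below assumes NumPy, an ideal chip-synchronous baseband signal with no PN scrambling or filtering, and a hypothetical channel set that places the Table 2 test-model levels on the codes active in Figure 1 (pilot on 0, paging on 1, sync on 32, traffic on 8 and 59 to 63). A real analyzer must first recover timing, frequency and the PN offset; that step is omitted here, and all names are illustrative.

```python
import numpy as np

def walsh_matrix(n):
    """+1/-1 Walsh codes via the Sylvester recursion (n a power of two)."""
    h = np.array([[1.0]])
    while h.shape[0] < n:
        h = np.block([[h, h], [h, -h]])
    return h

W = walsh_matrix(64)

# Hypothetical channel set: Walsh code -> power relative to total (dB)
active_db = {0: -7.0, 1: -7.3, 32: -13.3, 8: -10.3, 59: -10.3,
             60: -10.3, 61: -10.3, 62: -10.3, 63: -10.3}

def code_domain_power(chips, walsh):
    """Per-code power in dB relative to total, one Walsh symbol per row."""
    n = walsh.shape[0]
    symbols = chips.reshape(-1, n)            # one row per 64-chip symbol
    corr = symbols @ walsh.T / n              # despread against all 64 codes
    code_power = np.mean(corr ** 2, axis=0)   # average power in each code
    total_power = np.mean(chips ** 2)
    return 10 * np.log10(code_power / total_power)

rng = np.random.default_rng(0)
n_symbols = 200
composite = np.zeros(n_symbols * 64)
for code, level_db in active_db.items():
    amp = 10 ** (level_db / 20)                       # dB -> amplitude
    data = rng.choice([-1.0, 1.0], size=n_symbols)    # random +/-1 symbols
    composite += amp * np.repeat(data, 64) * np.tile(W[code], n_symbols)
composite += rng.normal(scale=0.01, size=composite.size)  # small noise floor

cdp = code_domain_power(composite, W)
print(np.round(cdp[[0, 1, 8, 32]], 2))
```

Because the Walsh codes are orthogonal, the per-code powers add up exactly to the total power (the readings sum to 0 dB), and the inactive codes show only the small added noise.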

Pilot, Paging, Sync and Traffic Channels are forward link channels – logical channels sent from a base station to a mobile phone at any given moment. Pilot, paging and sync channels are "overhead," or system channels that are used to establish system timing and station identity with mobiles and to manage the transmissions between them. Traffic channels carry individual user conversations or data.

The Pilot signal serves almost the same purpose as a lighthouse; it continuously repeats a simple spread signal at high power levels so that mobile phones can easily find the base station. The Pilot signal is usually the strongest of the 64 Walsh channels, and is always on Walsh code 0. Paging channels can be found on one or more of Walsh codes 1 to 7. These channels are used to notify mobile transceivers of incoming calls from the network and to handle their responses in order to assign them to traffic channels. The synchronization, or Sync, channel is always found on Walsh code 32. This channel carries a single repeating message with timing and system configuration information from the cdmaOne network. Finally, a Traffic channel is a forward link channel that is used to carry a conversation or data transmission to a mobile user.

The Reverse CDMA Channel carries traffic and signaling in the mobile-to-station direction. An individual reverse channel is active at the BTS only during calls or access signaling from a mobile.

Chip: The term "chip" is used in CDMA to avoid confusion with the term "bit." A bit is a single digital element of a digitized user conversation or data transmission. Since data is "spread" before being transmitted via RF, the term "chip" represents the smallest digital element after spreading. For example, at the 9600 bps full data rate used by one version of cdmaOne, each bit is represented by 128 chips.
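The 128-chip figure follows directly from the 1.2288 Mcps chip rate. On the forward link at 9600 bps, rate-1/2 convolutional coding produces 19,200 symbols per second, and each symbol is spread by a 64-chip Walsh code, giving 1,228,800 chips per second. A small worked check in Python, assuming the Rate Set 1 forward-link data rates; the helper name `chips_per_bit` is hypothetical:

```python
CHIP_RATE_CPS = 1_228_800  # cdmaOne chip rate: 1.2288 Mcps

def chips_per_bit(data_rate_bps: float) -> float:
    """Chips transmitted per information bit at a given user data rate."""
    return CHIP_RATE_CPS / data_rate_bps

# 9600 bps x 2 (rate-1/2 coding) x 64 (Walsh chips per symbol) = chip rate
assert 9600 * 2 * 64 == CHIP_RATE_CPS

for rate_bps in (9600, 4800, 2400, 1200):   # full, half, quarter, eighth rate
    print(f"{rate_bps:>5} bps -> {chips_per_bit(rate_bps):.0f} chips per bit")
```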

Short Code and PN Offset: Each base station sector in a cdmaOne network may transmit on the same frequency, using the same group of 64 Walsh codes for pilot, paging, sync and forward traffic channels. Therefore, another layer of coding is required so that a mobile phone can differentiate one sector from another.

The PN offset plays a key role in this code layer. The abbreviation "PN" stands for pseudo-random noise: a long bit sequence that appears to be random when viewed over a given period of time but in fact is repetitive. In cdmaOne transmissions, the PN sequence forms a short code that is 32,768 chips long and repeats approximately every 26.67 ms (about 0.027 seconds). The short code is exclusive-ORed with the data and transmitted in each of the forward channels (pilot, paging, sync and traffic). Within the 32,768-chip sequence, 512 points, spaced 64 chips apart, have been chosen to provide PN offsets. Each base station sector uses a different point in the sequence to create a unique PN offset of the short code in its forward link data. As a result, a mobile phone can identify each base station sector by the PN offset in the received signal.
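The short-code timing numbers above can be checked with a few lines of arithmetic. This sketch assumes the 1.2288 Mcps chip rate and a 64-chip spacing between PN offsets; the constant and function names are illustrative only.

```python
CHIP_RATE_CPS = 1_228_800                                # 1.2288 Mcps
PN_LENGTH_CHIPS = 32_768                                 # short code, 2**15 chips
PN_OFFSET_STEP_CHIPS = 64                                # spacing between offsets
NUM_PN_OFFSETS = PN_LENGTH_CHIPS // PN_OFFSET_STEP_CHIPS # 512 usable offsets

def pn_period_s() -> float:
    """Time for one full repetition of the short PN code, in seconds."""
    return PN_LENGTH_CHIPS / CHIP_RATE_CPS

def pn_offset_delay(offset_index: int) -> tuple[int, float]:
    """Delay of a sector's short code relative to the zero-offset code.

    Returns (delay in chips, delay in microseconds) for PN offset 0..511.
    """
    if not 0 <= offset_index < NUM_PN_OFFSETS:
        raise ValueError("PN offset index must be in the range 0..511")
    chips = offset_index * PN_OFFSET_STEP_CHIPS
    return chips, chips / CHIP_RATE_CPS * 1e6

print(f"PN period: {pn_period_s() * 1e3:.2f} ms")
print(f"Periods per 2 s: {2 / pn_period_s():.0f}")
print("Offset 1 (chips, us):", pn_offset_delay(1))
```

One consequence is that exactly 75 short-code periods fit in two seconds, which lines up with the "even second clock" used as the timing reference in the pilot time tolerance test.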

Basics of Testing cdmaOne BTS RF Signals

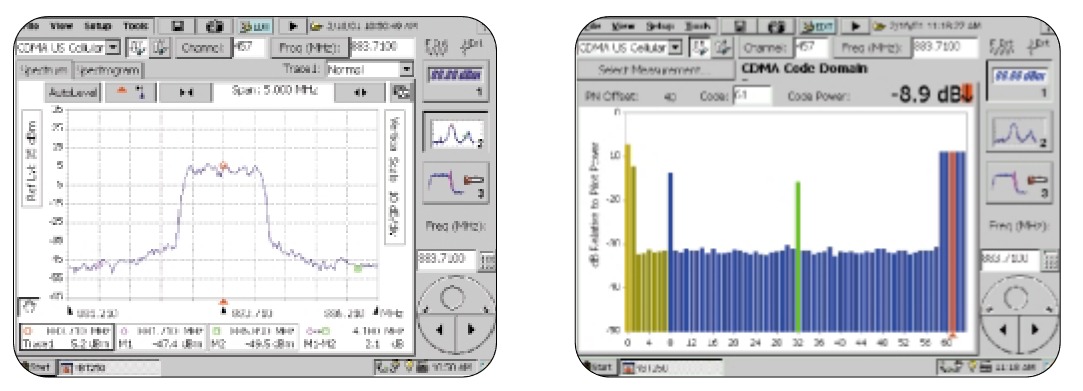

What Does a cdmaOne Transmission Look Like? Figure 2 shows a typical cdmaOne BTS transmission in both the frequency and code domains. Data from the system and from users is coded, spread and combined for transmission. The result is then modulated onto an RF carrier at a chip rate of 1.2288 Mcps, occupying a channel bandwidth of approximately 1.23 MHz. The code domain picture at right is simply the demodulated data from the frequency domain picture at left.

What Kinds of Tools are Specified? cdmaOne standards specify certain types of traditional measurement tools to be used in checking the RF signal performance of a BTS transmitter. These tools include a spectrum analyzer, a power meter, a mobile station simulator and a waveform-quality/code-domain power measurement device. We will examine each of these tools as if it were a separate instrument, even though the trend in new test equipment is to combine many of these functions into a single package.

Why Are All of These Tools Specified?

CDMA signals are complex. In order to characterize them completely, many different types of parameters must be tested – each of which has been specified in terms of a specific type of test tool. Each of the traditional test tools is well suited for making certain types of measurements, but may not be appropriate for others. Table 1 lists the common types of traditional tools and some of the measurements made with each.

| Measurement Tool | Sample Applications |
|---|---|
| CDMA Waveform Analyzer | Waveform quality (Tx); frequency error (Tx); pilot time tolerance (Tx); code domain power (Tx) |
| Spectrum Analyzer | In-band spurious emissions (Tx); out-of-band spurious emissions (Tx); general RF interference checks |
| Power Meter | Base station total output power (Tx) |

Table 1. CDMA Test Equipment

Power Meters

A power meter is a broadband device that has the ability to make very accurate, repeatable measurements of total output power of a base station. It is portable and relatively easy to use. Unfortunately, the power meter alone may not catch many CDMA BTS power problems that are related to individual frequencies or code channels. Two power meters testing separate base stations could show a slight difference in the readings of total power, but not enough to indicate a severe malfunction. Yet, one of these base stations could be experiencing dropped calls, while the other is not. There are a number of possible causes for poor performance that require additional tools to systematically find the problem and identify a solution. For example, the cause of a network disturbance may not be a single base station (or sector of a base station). Instead, an external source, such as a competing provider "leaking" into unassigned spectrum or a lower frequency RF signal with severe harmonic problems, may be interfering with the signal. In that case, a frequency selective spectrum analyzer is required to pinpoint the nature of the interference.

Spectrum Analyzers

A spectrum analyzer is a "frequency selective" power meter that displays power levels on the vertical axis versus frequency on the horizontal axis. While a power meter may yield a more accurate reading of absolute power level over a broad range of frequencies, a spectrum analyzer allows the user to more accurately determine power levels at each of the frequencies of interest. A spectrum analyzer can help troubleshoot problems such as interference and spurious signals being transmitted from a base station or by external sources.

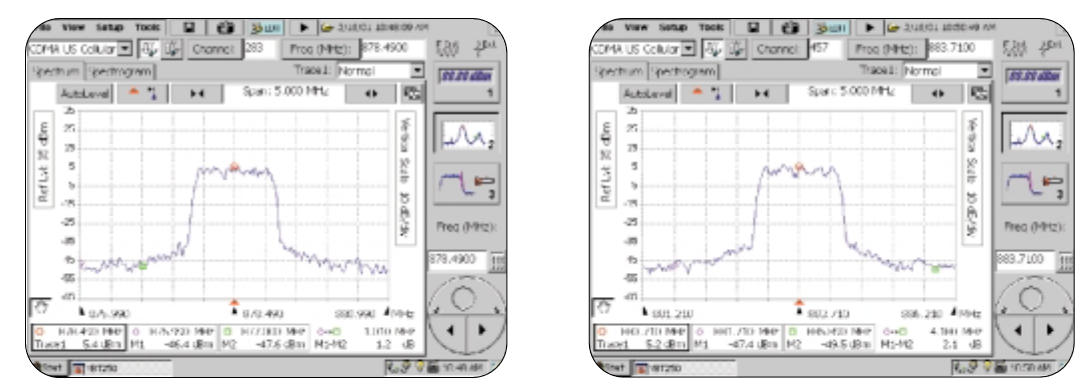

Figure 3 shows two images from a spectrum analyzer. Neither image would seem to indicate a severe problem with the base station sector being analyzed. The base station on the left, however, could be missing a sync channel and therefore be unable to support a call. There is no way to detect this type of problem with either a power meter or a spectrum analyzer; this kind of modulation problem calls for the third major type of test equipment: the CDMA Waveform or "Code Domain" Analyzer.

CDMA Waveform/Code Domain Analyzers

The CDMA waveform analyzer tunes to the transmission frequency of the base station sector and demodulates the signal. The demodulation process allows the waveform analyzer to display the performance of each of the individual Walsh code channels of the base station sector. The analyzer can also determine the timing and frequency error of the base station signals.

Measurement Challenges

This section describes the key transmitter measurements for installing and maintaining a cdmaOne base station. The tests are specified in TIA Interim Standards IS-95 and IS-97. Similar tests are specified in ARIB/TTC standards in Japan and TTA standards in South Korea.

Complex characteristics in multiple domains

CDMA signals are far more complex than those found in analog systems. Sophisticated code domain modulation and spread-spectrum schemes, handoff control and power management functions are but a few of the new technology challenges. Overall power level and frequency versus time measurements alone cannot verify performance or isolate problems in these new systems. We now must measure parameters such as PN offsets, timing, power levels and code performance over time.

New sources of error and interference

New sources of interference and noise arise from higher carrier frequencies, from coexistence with competing mobile systems and from exponential growth in other wireless and RF devices such as GPS systems and wireless LANs. Errors can become significant in any or all of the time, frequency and code domains, so all of them must be analyzed.

New Test Solutions Improve Productivity

Simple, general-purpose test tools cannot measure all of the important parameters in a CDMA RF system. We need to have much more sophisticated tools that can demodulate the signals and address multiple parameters and domains.

Full featured, laboratory instruments used for design, development and compliance testing of wireless devices are very complicated to operate, big, bulky and expensive – they are not appropriate for the rigors of daily field use.

A new breed of portable test system has been developed for the specific purpose of troubleshooting BTS performance in the field. These multi-function testers combine the key characteristics of the separate instruments described above into a single tool that can analyze the most important parameters of BTS RF signals. Unlike their general-purpose predecessors, field portable BTS test tools provide standard measurement functions that can be executed at the push of a button. Even novice users can acquire and display results on large LCD screens quickly and reliably.

These portable BTS testers are not intended for full compliance testing. They bring speed, efficiency and simplicity of operation to the troubleshooting process without the cost and complexity of meeting the strict accuracy requirements of conformance standards. They are battery-operated, compact, lightweight and extremely rugged for use in harsh conditions.

Some of these new testers are also modular and able to test multiple standards. As multiple mobile systems (CDMA, GSM, AMPS, etc.) are deployed in the same area, or even on the same BTS site, the ability of a single tester to isolate multiple sources of interference from those systems is a major benefit.

Conclusion

This release of the cdmaOne BTS Troubleshooting Application Note offers information for the RF technicians and engineers who install and maintain systems in the new world of wireless telecommunications. Updates will follow in the near future, as the technology and standards continue to evolve. This document is also available at our web site (www.tektronix.com), along with updates and related documents. Tektronix is committed to the most advanced test solutions for telecommunication networks. As mobile networks continue to evolve, we will keep you in the forefront with the latest measurement products and methods.

We welcome your comments and suggestions for improving this document and your ideas for developing other tools to help you meet the measurement challenges of new wireless systems. Contact us at the locations listed below, or through our web site.

Test Notebook: Common cdmaOne BTS Measurements

The following sections offer guidelines for tests to verify BTS RF performance and solve problems.

1.0 Transmitter Frequency Error

Standard: IS-97 4.1.2

What is being measured?

This measurement determines the difference between the actual transmitted carrier frequency of a base station sector and the designated frequency.

Why do I need to test this?

If a transmitted signal is slightly "off-center" from a designated frequency, the faulty signal may interfere with neighboring transmissions.

What are the consequences?

Frequency errors degrade the overall quality of service and may pollute neighboring systems' operations.

How is frequency error measured?

The test set evaluates the CDMA signal, determines the characteristic frequency of the signal and compares it with the desired frequency to determine the error. In the standards, this test is one of the group of "waveform quality" measurements that should be performed with the BTS transmitting only a pilot signal. Since that would require taking the base station off-line and disrupting service, test sets have been designed to make the measurements during normal operating conditions in the presence of multiple active signals and Walsh codes.

Note: When the tester uses the BTS reference frequency as its standard, it can only verify the error of the transmitted frequency versus that reference, not any error in the reference itself.

What is the specified limit?

IS-97 specifies that the frequency error be within ±5 × 10⁻⁸ (±0.05 ppm) of the frequency assignment. This translates to approximately ±45 Hz for cellular (869 to 894 MHz) and ±100 Hz for PCS (1930 to 1990 MHz) frequencies.
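The Hz figures are simply the ±5 × 10⁻⁸ tolerance multiplied by the carrier frequency. A minimal Python sketch of that arithmetic and a pass/fail check; the example carriers of 880 MHz and 1960 MHz are hypothetical mid-band values, and the function names are our own.

```python
FREQ_TOLERANCE = 5e-8   # +/-5 x 10^-8 of the assigned frequency (0.05 ppm)

def max_frequency_error_hz(assigned_hz: float) -> float:
    """Largest allowed carrier offset, in Hz, for an assigned frequency."""
    return FREQ_TOLERANCE * assigned_hz

def frequency_error_ok(measured_hz: float, assigned_hz: float) -> bool:
    """True if the measured carrier lies within tolerance of its assignment."""
    return abs(measured_hz - assigned_hz) <= max_frequency_error_hz(assigned_hz)

# Hypothetical example carriers, one in each band
for label, f_hz in (("cellular", 880e6), ("PCS", 1960e6)):
    print(f"{label}: {f_hz / 1e6:.0f} MHz -> +/-{max_frequency_error_hz(f_hz):.0f} Hz")
```

This only evaluates the limit; the measured frequency itself comes from the test set and, as noted above, is only as trustworthy as the reference it is compared against.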

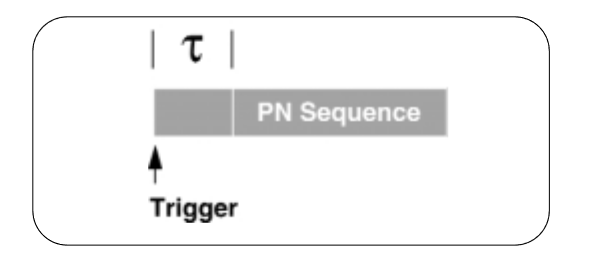

2.0 Pilot Time Tolerance (Tau)

Standard: IS-97 4.3.1.1

What is being measured?

The difference between the transmitted start of the PN sequence of the pilot Walsh code and a system-based external trigger event is measured to determine the Pilot Time Tolerance (see Figure 4).

Why do I need to test this?

All base stations must be synchronized within a few microseconds for station ID mechanisms to work reliably. cdmaOne networks use the Global Positioning System (GPS) to maintain system time. This fundamental measurement ensures that the pilot signal for any given sector of a base station is "tracking" with the network's system time.

What are the consequences?

Deviation from network timing could result in dropped calls and missed handoffs, since the faulty base station timing would not match the timing on the remainder of the system's base stations.

How is pilot time tolerance measured?

Transmitter output from the base station is demodulated in order to determine the start of the pilot PN sequence. The test set uses the "even second clock" signal (available on all base stations) as the external trigger reference for zero offset. Taking into account the programmed PN offset, the test set then calculates the difference between the time of the trigger and the time of the pilot PN sequence and reports that difference as a single time tolerance value expressed in microseconds. This measurement is another of the waveform quality standards that suggest a pilot-only transmission from a base station. In practice, most testers are able to make the measurement of pilot time-alignment error on a live mixed signal with paging, sync and traffic Walsh codes active, avoiding any disruption of service.

What is the specified limit?

IS-97 specifies that the pilot time-alignment error must be less than 10 microseconds; IS-95 and IS-97 both also state that it should be less than 3 microseconds.
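The pilot time tolerance calculation reduces to subtracting the expected PN-offset delay from the measured delay between the even-second trigger and the start of the pilot PN sequence. This is a sketch under stated assumptions: both timestamps share one time base, cable and trigger delays have already been removed, and the measured start is the PN start corresponding to the programmed offset. The function names and the "pass", "marginal" and "fail" labels are hypothetical.

```python
CHIP_RATE_CPS = 1_228_800     # 1.2288 Mcps
PN_OFFSET_STEP_CHIPS = 64     # chips between adjacent PN offsets

def pilot_time_error_us(trigger_time_s, pn_start_time_s, pn_offset_index):
    """Pilot time-alignment error (tau) in microseconds.

    trigger_time_s  : time of the even-second clock edge (system time zero)
    pn_start_time_s : measured start of the sector's pilot PN sequence
    pn_offset_index : the sector's programmed PN offset (0..511)
    """
    expected_delay_s = pn_offset_index * PN_OFFSET_STEP_CHIPS / CHIP_RATE_CPS
    return (pn_start_time_s - trigger_time_s - expected_delay_s) * 1e6

def classify_tau(error_us):
    """Compare tau with the 3 us recommendation and the 10 us limit."""
    magnitude = abs(error_us)
    if magnitude < 3.0:
        return "pass"        # within the recommended 3 us
    if magnitude < 10.0:
        return "marginal"    # meets the 10 us limit but not the 3 us goal
    return "fail"

# Hypothetical sector on PN offset 100 (expected delay about 5208.33 us)
tau = pilot_time_error_us(0.0, 5.2105e-3, 100)
print(f"tau = {tau:.2f} us -> {classify_tau(tau)}")
```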

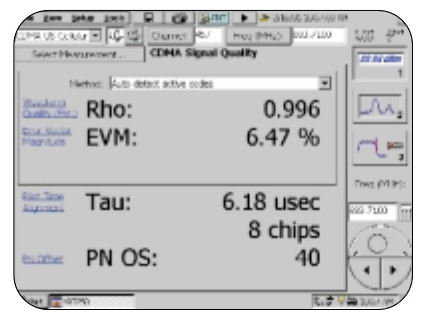

3.0 Waveform Quality (rho)

Standard: IS-97 4.3.2

What is being measured?

Waveform quality, often referred to as rho (ρ), is perhaps the most common measurement for CDMA systems. Rho is a correlation that represents how closely the transmitted signal matches the ideal power distribution for a CDMA signal (see Figure 5). Think of the tested signal power distribution as the numerator of a fraction and the ideal distribution as the denominator – a waveform quality constant of 1.0 would represent a perfectly correlated CDMA signal.

Why do I need to test this?

This measure is a good tool for judging the modulation quality of the transmitted CDMA signal and offers a quick and easy "snapshot" of the overall performance of the system.

What are the consequences?

Deviations in code power distribution degrade system performance for the user and lower efficiency for the operator.

How is waveform quality measured?

The test set demodulates the transmitter output from the base station and compares the distribution of power received to the ideal power distribution at specified "decision points" in the CDMA transmission. IS-97 specifies that this measurement is to be made on a pilot-only forward link signal. Furthermore, the test is to be made over a sample period of at least 1280 chips (see Figure 6). In practice, a pilot-only transmission from a base station implies that the base station has been removed from service. Most testers now offer an "estimated rho" capability that allows the factor to be derived in the presence of multiple Walsh codes – the approximate rho value can be calculated without taking down a sector of the base station.

What is the specified limit?

IS-97 specifies that the waveform quality constant be greater than 0.912.
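A common way to compute rho is as the normalized correlated power between the measured samples Z and the ideal reference R at the decision points, ρ = |Σ R*Z|² / (Σ|R|² · Σ|Z|²), which reaches 1.0 only for a perfect match. The sketch below assumes NumPy and samples already aligned in time, frequency and phase (a real analyzer estimates and removes those offsets first). The random QPSK-like pilot reference of 1280 chips, the noise level and the function name are hypothetical.

```python
import numpy as np

RHO_LIMIT = 0.912   # IS-97 minimum waveform quality

def waveform_quality(measured, ideal):
    """Normalized correlated power (rho) between measured and ideal samples.

    A constant gain or phase rotation of `measured` does not change rho;
    noise and distortion lower it below 1.0.
    """
    measured = np.asarray(measured, dtype=complex)
    ideal = np.asarray(ideal, dtype=complex)
    correlated = np.abs(np.vdot(ideal, measured)) ** 2        # |sum conj(R) Z|^2
    energy = np.vdot(ideal, ideal).real * np.vdot(measured, measured).real
    return correlated / energy

rng = np.random.default_rng(1)
n_chips = 1280
ideal = (rng.choice([-1, 1], n_chips) + 1j * rng.choice([-1, 1], n_chips)) / np.sqrt(2)

noise_var = 0.05                                   # hypothetical impairment
noise = rng.normal(scale=np.sqrt(noise_var / 2), size=(2, n_chips))
measured = np.exp(1j * 0.3) * ideal + noise[0] + 1j * noise[1]

rho = waveform_quality(measured, ideal)
print(f"rho = {rho:.3f}, meets limit: {rho > RHO_LIMIT}")
```

With noise power at 5 % of the signal power, rho lands near 1/1.05 (about 0.95), above the 0.912 limit.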

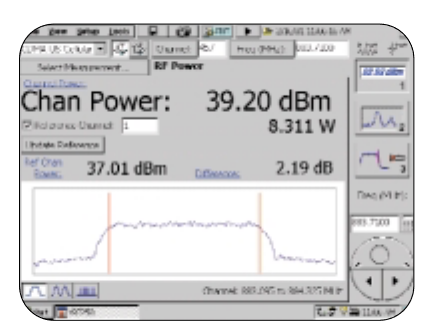

4.0 Total Power, or Channel Power

Standard: IS-97 4.4.1

What is being measured?

This measurement verifies the total output power from the base station or appropriate sector of the base station (see Figure 7).

Why do I need to test this?

Power is the fundamental measurement of BTS range and performance.

What are the consequences?

Overall power levels must be contained within limits to prevent interference among neighboring stations while maximizing coverage.

How can total power be measured?

The unique composition of a CDMA signal makes the measurement of power more demanding than with other communications systems. The spread-spectrum signal has the appearance of a "noise-like" signal spread over a 1.23 MHz bandwidth, but it can have much higher peak-to-average power ratios than a noise signal, making it difficult to derive true RMS values with some types of test equipment.

Total power can be measured directly with a dedicated thermal power head meter. Spectrum analyzers have been used to make this measurement by summing the power measurements across the 1.23 MHz wide CDMA bandwidth. However, most spectrum analyzers assume that inputs are CW signals, so power measurements of CDMA signals can be 9 dB or more in error, depending upon which Walsh codes are active. Most CDMA BTS testers now use well-defined DSP sampling techniques to measure power with appropriate RMS computations. IS-97 specifies a base station signal configuration of pilot, paging, sync and six traffic channels active for this measurement (see Table 2).

| Code Type | Number of Channels | Power Relative to Total (dB) | Comments |
|---|---|---|---|
| Pilot | 1 | -7.0 | Code channel 0 |
| Sync | 1 | -13.3 | Code channel 32, always 1/8 rate |
| Paging | 1 | -7.3 | Code channel 1, full rate only |
| Traffic | 6 | -10.3 (each) | Variable code channel assignments; full rate only |

Table 2. Nominal Testing Model (from IS-97)
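As a quick consistency check on Table 2, converting each level to a linear power fraction and summing shows that the test-model channels account for about 99 % of the total power. This assumes the -10.3 dB traffic level applies to each of the six traffic channels; the names are illustrative.

```python
import math

# Table 2 nominal test model: (channel type, count, level in dB re total power)
TEST_MODEL = [("Pilot", 1, -7.0), ("Sync", 1, -13.3),
              ("Paging", 1, -7.3), ("Traffic", 6, -10.3)]

def total_fraction(model):
    """Sum of all channel powers as a linear fraction of total power."""
    return sum(count * 10 ** (level_db / 10) for _, count, level_db in model)

frac = total_fraction(TEST_MODEL)
print(f"channels carry {frac:.4f} of total power ({10 * math.log10(frac):+.3f} dB)")
```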

What is the specified limit?

IS-97 specifies that the total power shall remain within +2 dB and –4 dB of the specified base station (or sector) power.
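A spectrum-analyzer channel-power reading is formed by converting each bin from dBm to linear power, summing the bins that fall inside the 1.23 MHz channel, and converting back to dBm. The sketch below assumes NumPy and bins that already hold true RMS power for non-overlapping slices of spectrum, which is exactly the assumption that CW-oriented detectors violate on CDMA signals. The trace, the 10 dBm specified power and the function names are hypothetical.

```python
import numpy as np

def channel_power_dbm(bin_powers_dbm, bin_freqs_hz, center_hz, bandwidth_hz=1.23e6):
    """Integrate bin powers (dBm) over the CDMA channel bandwidth."""
    p_dbm = np.asarray(bin_powers_dbm, dtype=float)
    f_hz = np.asarray(bin_freqs_hz, dtype=float)
    inside = np.abs(f_hz - center_hz) <= bandwidth_hz / 2
    total_mw = np.sum(10 ** (p_dbm[inside] / 10))   # sum in mW, never in dB
    return 10 * np.log10(total_mw)

def total_power_ok(measured_dbm, specified_dbm):
    """IS-97 window: from 4 dB below to 2 dB above the specified power."""
    delta_db = measured_dbm - specified_dbm
    return -4.0 <= delta_db <= 2.0

# Hypothetical trace: 10 kHz bins, flat -10 dBm per bin inside the channel
center = 880e6
freqs = center + np.arange(-100, 101) * 10e3
powers = np.where(np.abs(freqs - center) <= 0.615e6, -10.0, -60.0)

measured = channel_power_dbm(powers, freqs, center)
print(f"channel power = {measured:.2f} dBm, ok = {total_power_ok(measured, 10.0)}")
```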

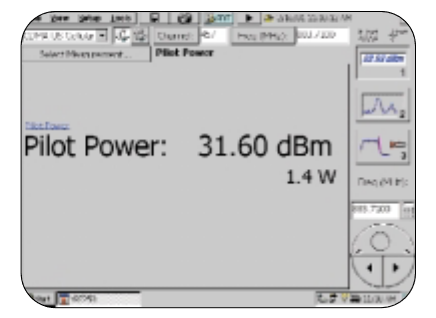

5.0 Pilot Power

Standard: IS-97 4.4.3

What is being measured?

The ratio of power in the pilot channel to the total channel power transmitted (see Figure 8).

Why do I need to test this?

Pilot power is a measure of the BTS range to mobile devices.

What are the consequences?

A pilot power level that deviates substantially from desired values can affect the coverage characteristics of the network.

How is pilot power measured?

The test equipment demodulates the transmitted signal to analyze power levels in the code domain. The power contained in Walsh code 0 is then reported in dBm and watts.

What is the specified limit?

IS-97 specifies that the pilot power shall remain within ±0.5 dB of the BTS sector configuration value.
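Moving between the absolute and relative forms of the pilot reading is simple dB arithmetic. A sketch with hypothetical names and example values (a sector with 43 dBm total output and a pilot configured for 36 dBm):

```python
def dbm_to_watts(power_dbm: float) -> float:
    """Convert dBm (dB relative to 1 mW) to watts."""
    return 10 ** ((power_dbm - 30) / 10)

def pilot_fraction_db(pilot_dbm: float, total_dbm: float) -> float:
    """Pilot power relative to total channel power, in dB."""
    return pilot_dbm - total_dbm

def pilot_power_ok(pilot_dbm: float, configured_dbm: float, tol_db: float = 0.5) -> bool:
    """True if the pilot is within +/-tol_db of the sector's configured value."""
    return abs(pilot_dbm - configured_dbm) <= tol_db

# Hypothetical sector readings
total_dbm, pilot_dbm, configured_dbm = 43.0, 36.2, 36.0
print(f"pilot = {pilot_dbm} dBm ({dbm_to_watts(pilot_dbm):.2f} W), "
      f"{pilot_fraction_db(pilot_dbm, total_dbm):.1f} dB re total, "
      f"ok = {pilot_power_ok(pilot_dbm, configured_dbm)}")
```

Subtracting two dBm values gives their ratio in dB directly, so the pilot-to-total ratio needs no conversion to watts.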

6.0 Code Domain Power

Standard: IS-97 4.4.4

What is being measured?

In a CDMA system, individual user transmissions are isolated by their codes. The test equipment measures the ratio of power in each of the forward link Walsh codes to the total transmitted channel power. Figure 9 shows a graph of the Code Domain.

Why do I need to test this?

A base station's ability to accurately control the power in individual Walsh codes is a prerequisite to properly handle multiple user links with varying RF losses and to ensure interference-free transmissions.

What are the consequences?

Loss of quality and channel capacity due to inadequate or unbalanced power.

How is code domain power measured?

The test equipment demodulates the transmitted signal to analyze power levels in each of the 64 forward link Walsh codes. The power in each of the codes is expressed in dB relative to the total transmitter power in the channel. Code domain power is verified with the base station sector producing a combination of Pilot, Sync, Paging and six traffic channels (see Table 2 in Section 4.0). The level of the inactive Walsh codes (those forward codes without an overhead or traffic signal) can also be compared against the standard.

What is the specified limit?

IS-97 specifies that the code domain power in each inactive Walsh code shall be 27 dB or more below the total output power.
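Once code domain power readings are available, checking the inactive-code limit is a simple filter. This sketch assumes a list of 64 readings in dB relative to total power and a known set of active codes; the example reading, including the leakage into Walsh code 17, and the function name are hypothetical.

```python
INACTIVE_LIMIT_DB = -27.0   # inactive codes must be at least 27 dB below total

def inactive_code_violations(cdp_db, active_codes, limit_db=INACTIVE_LIMIT_DB):
    """List inactive Walsh codes whose relative power exceeds the limit.

    cdp_db       : 64 code domain power readings, dB relative to total power
    active_codes : Walsh codes known to carry pilot, paging, sync or traffic
    """
    return [code for code, level_db in enumerate(cdp_db)
            if code not in active_codes and level_db > limit_db]

# Hypothetical reading: Table 2 channels active, noise floor near -40 dB,
# and unwanted leakage into Walsh code 17
active = {0: -7.0, 1: -7.3, 32: -13.3, 8: -10.3, 59: -10.3,
          60: -10.3, 61: -10.3, 62: -10.3, 63: -10.3}
reading = [active.get(code, -40.0) for code in range(64)]
reading[17] = -22.5

print("violations:", inactive_code_violations(reading, active))
```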

Appendix

A Brief History of Wireless Telephony

The public wireless telephony business has seen a number of significant changes throughout its fifty-year life. The first systems offering mobile telephone service (car phone) were introduced in the late 1940s in the United States and in the early 1950s in Europe. Those early single-cell systems were severely constrained by restricted mobility, low capacity, limited service and poor speech quality. The equipment was heavy, bulky, expensive and susceptible to interference. Because of those limitations, fewer than one million subscribers were registered worldwide by the early 1980s.

First Generation (1G): Analog Cellular

The introduction of cellular systems in the early 1980s represented a quantum leap in mobile communication (especially in capacity and mobility). Semiconductor technology and microprocessors made smaller, lighter weight and more sophisticated mobile systems a practical reality for many more users. These "1G" cellular systems carry only analog voice or data information. The most prominent 1G systems are Advanced Mobile Phone System (AMPS), Nordic Mobile Telephone (NMT) and Total Access Communication System (TACS). With the 1G introduction, the mobile market grew to nearly 20 million subscribers by 1990.

Second Generation (2G): Multiple Digital Systems

Since 1990, the industry has been rapidly shifting from analog to digital communications standards. "2G" digital systems introduced smaller handsets, enhanced services and improved transmission quality, system capacity and coverage. 2G cellular systems include GSM, IS-136 (TDMA), cdmaOne and Personal Digital Communication (PDC). By 1998, multiple 1G and 2G mobile communications systems were serving hundreds of millions of cellular subscribers, worldwide. Speech transmission still dominated the airways, but the growing demands for fax, short message and data transmissions called for further enhancements to the systems.

Evolution to the 3rd Generation: 2.5G and IMT-2000

The phenomenal growth of wireless technology has created a complex landscape of services and regional systems. Different standards serve different applications with different levels of mobility, capability and service area (paging systems, cordless telephone, wireless local loop, private mobile radio, cellular systems and mobile satellite systems). In 1998, standards developing organizations (SDOs) around the world addressed the multiplicity of standards and performance limitations in existing systems with definitions of a new 3rd Generation (3G) of standards.

The objective of 3G standards, known collectively as IMT–2000, was to create a single family of compatible definitions that have the following characteristics:

- used worldwide

- used for all mobile applications

- support both packet-switched (PS) and circuit-switched (CS) data transmission

- offer high data rates up to 2 Mbps (depending on mobility/velocity)

- offer high spectrum efficiency

IMT stands for International Mobile Telecommunications, and "2000" represents both the year for initial trial systems and the frequency range of 2000 MHz. In total, proposals for 17 different IMT–2000 standards for various aspects of wireless systems were submitted by regional SDOs to the International Telecommunication Union (ITU). All 17 proposals were refined and adopted by the ITU, and the specification for the Radio Transmission Technology (RTT) was released at the end of 1999. 3G systems are expected to be phased in over the coming ten years and will coexist with 1G and 2G systems in most locations.

As the wireless world evolves into 3G, existing systems continue to be enhanced with the introduction of IS-95 revisions to add intelligent network data transmission services and to improve the performance of both voice and data transmissions.

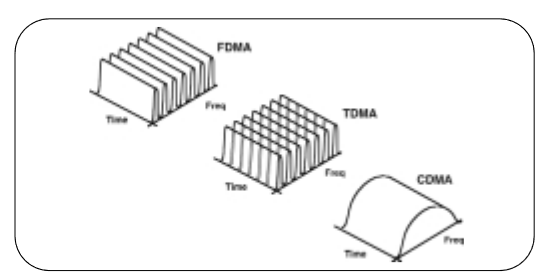

Access Methods for Wireless Communications

Subscriber access to each wireless cellular system is provided by one of several types of RF transceiver systems. In analog cellular systems such as AMPS, each user occupies a unique transmit and receive frequency, a method known as Frequency Division Multiple Access (FDMA). In digital Time Division Multiple Access (TDMA) systems such as GSM, individual users occupy time slots on a given frequency; each user has a unique period of time for receiving the transmission from the base station. In Code Division Multiple Access (CDMA) digital systems, each user is assigned a unique digital code, and multiple users share the same RF carrier at the same time, each distinguished by its own code. Diagrams of each method are shown in Figure 10.

In analog FDMA systems, the user occupies one frequency channel (30 kHz bandwidth for AMPS) for transmission and another for reception. These transmit/receive channels are dedicated for the duration of a phone call, so they are unavailable for further use until the call has been completed. During peak hours, subscribers are often unable to access the system, which results in lost revenue for a network operator and frustration for the user.

Digital systems, such as TDMA and CDMA, offer far greater efficiency than FDMA. In both methods, the caller's speech is converted to a digital bit stream that can be more easily manipulated and compressed to increase capacity and improve utilization. TDMA systems subdivide a given frequency channel into time slots, and compressing each conversation so that it fits into its own slot allows more conversations to occupy the same frequency space. In IS-136, for example, a 30 kHz channel is divided into multiple time slots, with each slot allocated to a specific user. In this way, multiple users can share the same duplex channel pair. IS-136 and GSM are popular systems that use a TDMA access method.

CDMA systems use a much broader bandwidth than either FDMA or TDMA systems. Instead of dividing users by frequency or time slot, the system assigns a unique digital code to each user and transmits that code along with the conversation. When the receiver applies the correct code, the appropriate conversation of that user is extracted and reconstructed. In a CDMA system, multiple users' signals occupy the same RF frequency band at the same time. The layer of unique user codes is used to identify each of the multiple users in the transmission.
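The extraction step described above can be illustrated with two users sharing one carrier. This minimal sketch assumes NumPy, ideal chip-synchronous signals and no PN scrambling; the user names, Walsh code assignments and data symbols are hypothetical.

```python
import numpy as np

def walsh_matrix(n):
    """+1/-1 Walsh codes via the Sylvester recursion (n a power of two)."""
    h = np.array([[1]])
    while h.shape[0] < n:
        h = np.block([[h, h], [h, -h]])
    return h

W = walsh_matrix(64)
user_codes = {"A": 5, "B": 17}                          # hypothetical assignments
user_bits = {"A": [1, -1, -1, 1], "B": [-1, -1, 1, 1]}  # +/-1 data symbols

# Transmit: spread each user's symbols by its code, then sum all users
tx = sum(np.concatenate([b * W[user_codes[u]] for b in bits])
         for u, bits in user_bits.items())

def despread(signal, code, n=64):
    """Correlate each n-chip block with one Walsh code; the sign is the symbol."""
    blocks = signal.reshape(-1, n)
    return np.sign(blocks @ W[code]).astype(int).tolist()

recovered = {u: despread(tx, c) for u, c in user_codes.items()}
print(recovered)
```

Correlating with a code that no user was assigned returns zero, which is the orthogonality that keeps the users from interfering with one another.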